Last updated 6 hours ago. Total questions: 109.

The Implementing Data Engineering Solutions Using Microsoft Fabric content is fully updated, and all current exam questions were added 6 hours ago. Including DP-700 practice exam questions in your study plan goes beyond basic test preparation.

Our DP-700 exam questions often feature detailed scenarios and practical problem-solving exercises that mirror real industry challenges. Working through these DP-700 sample sets helps you manage your time and pace yourself, so you can finish any Implementing Data Engineering Solutions Using Microsoft Fabric practice test within the allotted time.

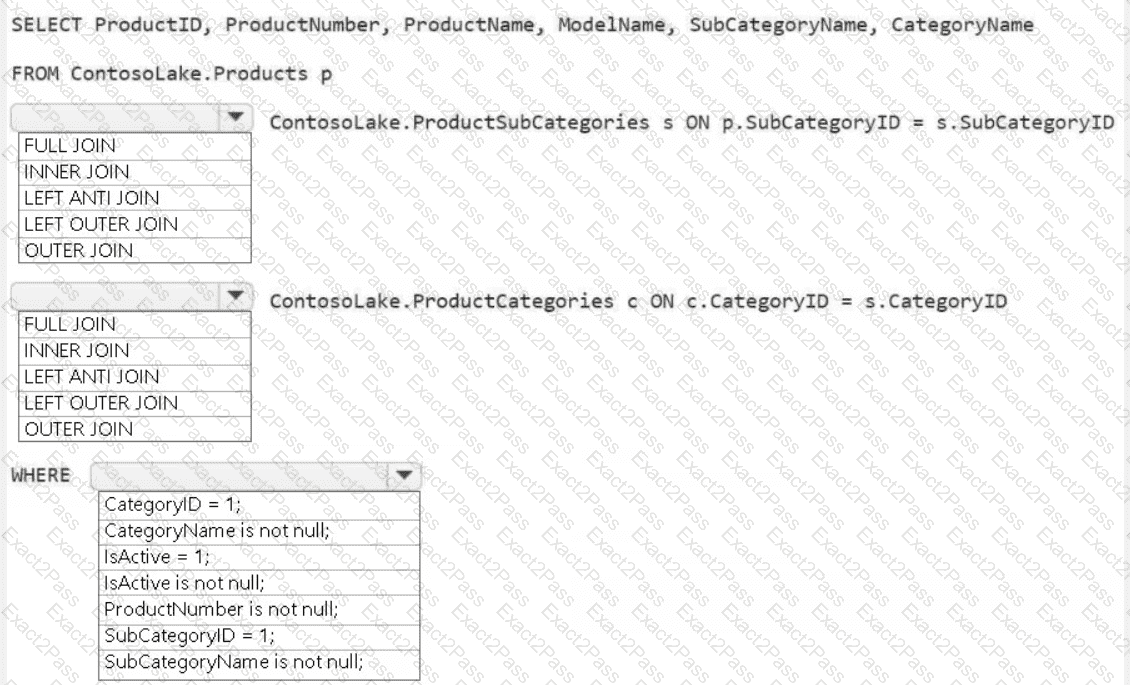

You need to create the product dimension.

How should you complete the Apache Spark SQL code? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

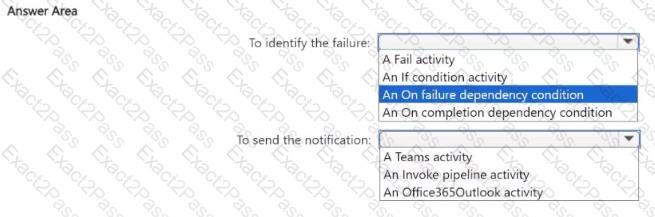

You need to ensure that the data engineers are notified if any step in populating the lakehouses fails. The solution must meet the technical requirements and minimize development effort.

What should you use? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

You need to recommend a solution for handling old files. The solution must meet the technical requirements.

What should you include in the recommendation?

You have a Fabric workspace named Workspace1. Your company acquires GitHub licenses.

You need to configure source control for Workspace1 to use GitHub. The solution must follow the principle of least privilege.

Which permissions do you require to ensure that you can commit code to GitHub?

You have five Fabric workspaces.

You are monitoring the execution of items by using Monitoring hub.

You need to identify the workspace in which a specific item runs.

Which column should you view in Monitoring hub?

You have an Azure key vault named KeyVault1 that contains secrets.

You have a Fabric workspace named Workspace1. Workspace1 contains a notebook named Notebook1 that performs the following tasks:

• Loads stage data to the target tables in a lakehouse

• Triggers the refresh of a semantic model

You plan to add functionality to Notebook1 that will use the Fabric API to monitor the semantic model refreshes. You need to retrieve the registered application ID and secret from KeyVault1 to generate the authentication token.

Solution: You use `notebookutils.credentials.getSecret` and specify the key vault URL and the key vault secret.

Does this meet the goal?

What should you do to optimize the query experience for the business users?